Browser agent bot detection is about to change

How we built Browser Use to stay undetectable

Let me save you months of searching for providers and solutions for using the web at scale: your cloud browser provider's "magic stealth mode" is detectable in under 50 milliseconds.

Playwright with stealth plugins. "Stealth" cloud browser providers. Some guy on Discord saying his Selenium fork "is undetectable." None of that works.

And before you say "but it works on my machine with Google Chrome", congrats 👏. Your personal Chrome instance with a real fingerprint, headful browser, real hardware, and a legit whitelisted residential IP does not get blocked. Shocking. Who would have thought.

We're talking about automation at scale. Thousands of concurrent sessions. Millions of requests.

That's where everything breaks. Here's why, and what we built instead.

The cat & mouse game is about to get harder

Right now, antibot systems (Akamai, Cloudflare, DataDome, and pretty much everyone else) can detect far more than they actually block. They're playing it safe. Why? Because blocking a real customer is worse than letting a bot through. False positives kill conversion rates. So they set their thresholds conservatively.

This is the only reason most automation still works.

But here's what's changing: AI agents are about to flood the web. Agentic browsers, autonomous assistants, automated workflows. The volume of non-human traffic, already large, is about to explode. And antibot vendors are watching.

When bot traffic reaches a tipping point, the cost of letting bots through starts exceeding the cost of occasionally blocking humans. And when that happens, they'll tighten the screws.
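To make the tipping point concrete, here's a toy cost model in Python. Every number in it is made up for illustration: the bot share, the false-positive rate, and both cost figures are assumptions, not vendor data. `cheaper_to_block` is a hypothetical helper, not something an antibot vendor ships.

```python
def cheaper_to_block(bot_share: float,
                     false_positive_rate: float,
                     cost_per_bot_allowed: float,
                     cost_per_human_blocked: float) -> bool:
    """Return True when strict blocking costs less than staying lenient.

    Costs are expressed per request, averaged over all traffic.
    All inputs are illustrative assumptions.
    """
    # Lenient policy: every bot gets through and does its damage.
    cost_lenient = bot_share * cost_per_bot_allowed
    # Strict policy: bots are stopped, but some real customers get blocked too.
    cost_strict = (1 - bot_share) * false_positive_rate * cost_per_human_blocked
    return cost_strict < cost_lenient


# Bots are 5% of traffic: blocking a human is far too expensive to risk.
print(cheaper_to_block(0.05, 0.01, 0.10, 20.0))  # False
# Bots are 70% of traffic: the same false-positive rate is now worth paying.
print(cheaper_to_block(0.70, 0.01, 0.10, 20.0))  # True
```

Nothing about the detection changed between the two calls. Only the traffic mix did, and that alone flips the decision.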

Detection methods that are currently "monitor only" will become "block." Thresholds will drop. The cat & mouse game that scrapers have been winning for years? It's about to get a lot harder.

The tools that work today won't work tomorrow. JavaScript patches, stealth plugins, CDP hacks. They're already detectable. Antibots just haven't flipped the switch yet.

We're building for that future. A browser that's undetectable not because antibots are being lenient, but because there's genuinely nothing to detect.

So we did something different: we forked Chromium

At Browser Use, we maintain a custom Chromium fork built specifically for undetectable automation.

Actual modifications to the Chromium source code. Currently tracking the latest major Chromium version, with dozens of patches that address detection vectors at the C++ and OS level.

When our browser says navigator.webdriver === false, it's not because JavaScript lied about it. It's because the value was never true in the first place.

Every function still returns [native code] when stringified. Every prototype chain is intact. Every iframe and Worker reports consistent values.

Because we didn't override anything. We changed what the browser actually does.

Running fully headless, we bypass all major antibot systems: Cloudflare, DataDome, Kasada, Akamai, PerimeterX, Shape Security. You name it. Not with hacks. Not with prayers. With a browser that was engineered to be undetectable.

Not everything is in the browser...

Here's what most "stealth" solutions miss: JavaScript fingerprinting is just one layer.

Modern antibot systems don't just check navigator.webdriver. They cross-reference everything:

- IP reputation: Is this a datacenter IP? A known proxy? A residential address?

- Timezone & locale: Does your reported timezone match your IP's geolocation?

- Hardware consistency: Do your GPU, audio hardware, and screen resolution make sense together?

- API availability: Are the APIs that should exist on your reported OS and browser version actually present?

- Behavioral signals: Mouse movements, scroll patterns, typing cadence

They correlate all of this. Your browser says Windows, but your GPU is SwiftShader? Flagged. Your timezone says New York, but your IP is in Frankfurt? Flagged. You claim macOS but you're missing APIs that every Mac has? Flagged.
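Here's a minimal Python sketch of that kind of cross-referencing, covering just two of the checks above. It is not how any particular vendor does it. `SessionSignals`, `find_mismatches`, and both lookup tables are hypothetical names invented for this example, and real systems work from far larger datasets.

```python
from dataclasses import dataclass

# Hypothetical lookup tables, kept tiny for illustration.
SOFTWARE_RENDERERS = ("swiftshader", "llvmpipe")
TIMEZONE_COUNTRY = {
    "America/New_York": "US",
    "Europe/Berlin": "DE",
}


@dataclass
class SessionSignals:
    claimed_os: str    # OS the browser reports, e.g. "Windows"
    gpu_renderer: str  # WebGL renderer string
    timezone: str      # IANA timezone the browser reports
    ip_country: str    # country derived from the exit IP


def find_mismatches(s: SessionSignals) -> list[str]:
    """Return a list of inconsistencies between independent signals."""
    flags = []
    renderer = s.gpu_renderer.lower()
    # A consumer desktop OS rendering through a software GPU is suspicious.
    if s.claimed_os in ("Windows", "macOS") and any(
        name in renderer for name in SOFTWARE_RENDERERS
    ):
        flags.append("software GPU on a consumer OS")
    # The browser's timezone should agree with where the IP is located.
    expected_country = TIMEZONE_COUNTRY.get(s.timezone)
    if expected_country is not None and expected_country != s.ip_country:
        flags.append("timezone does not match IP geolocation")
    return flags


session = SessionSignals("Windows", "Google SwiftShader", "America/New_York", "DE")
print(find_mismatches(session))
# ['software GPU on a consumer OS', 'timezone does not match IP geolocation']
```

Each signal on its own looks plausible. The flags only appear once the signals are compared against each other, which is why patching a single value rarely helps.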

We cover all of it.

Our stack isn't just a browser. It's a complete solution:

- Chromium fork for JavaScript fingerprint consistency

- Proxy infrastructure with residential IPs and proper geolocation

- Timezone and locale injection matched to your exit IP

- Behavioral layer for human-like interaction patterns

Every signal, cross-referenced and consistent.

...and not everything is about stealth

Not every patch is about evading detection.

A lot of our Chromium work is about making browsers and AI agents work better together.

When you're running thousands of concurrent browsers, inefficiencies add up fast. So we optimized aggressively: compositor throttling, feature stripping, V8 memory tuning, CDP message optimization, smart caching layers.

Here's a cool example of something competitors skip entirely: profile encryption that actually works across machines. Most providers just slap --password-store=basic on their Chrome flags and call it a day. That tells Chrome to skip the OS keyring, which effectively disables encryption. Your saved credentials, cookies, session data? Readable by anyone who gets hold of the profile. We patched profile encryption to remain secure while still being portable across infrastructure. Your customers' data stays protected.

This isn't just about running headless. It's about building a browser designed for agents, not humans.

The result: More browsers per machine. Lower infrastructure costs. Faster cold starts. Better agent performance.

Real-world fingerprints instead of Linux everywhere

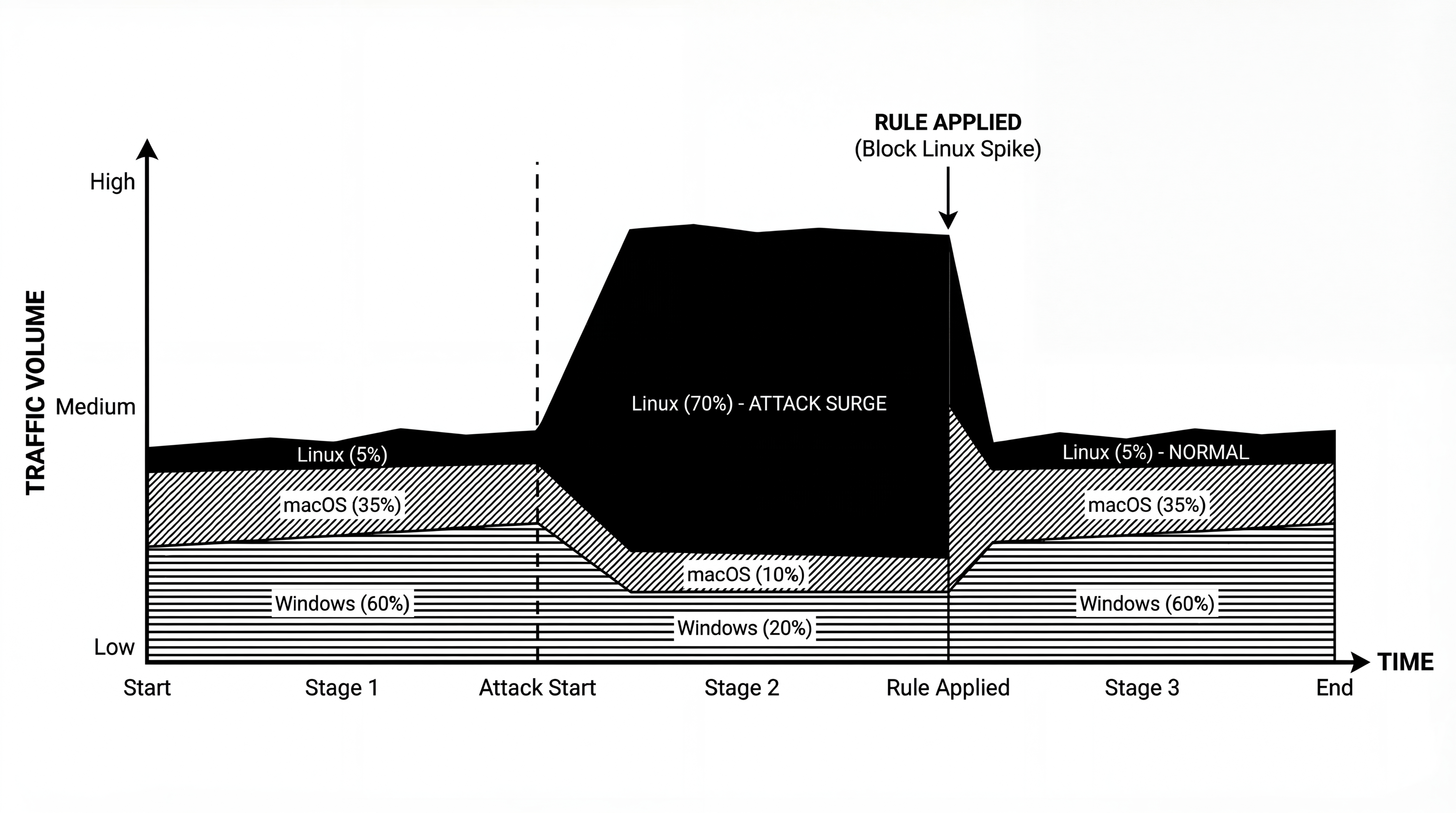

Our competitors run everything on Linux and hope nobody notices.

Linux desktop represents less than 5% of global web traffic.

And when they try to fake Windows or macOS fingerprints? They fail hard. Missing APIs. Wrong audio configurations. Inconsistent GPU reports. The mismatch is obvious to anyone looking. They're lucky that most antibots haven't cranked up their detection thresholds yet. When that changes, their whole approach falls apart.

When your automation fleet is 100% Linux hitting a consumer website, you've already failed. Antibot systems know that real humans use:

- Windows: ~60% of desktop traffic

- macOS: ~35% of desktop traffic

- Linux: ~5% of desktop traffic

And it gets worse. Major antibot systems use AI to analyze traffic patterns when they detect unusual activity. They look for shared fingerprint signatures across requests. When they spot a pattern, they don't just block individual sessions. They apply temporary rules that block entire networks and IP ranges. If your fleet shares the same Linux fingerprint pattern, one bad actor using the same provider can get your whole operation flagged.
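A rough Python sketch of what "shared fingerprint signatures" could look like on the detection side. This is an assumption about the general technique, not a description of any vendor's pipeline. The session shape, `fingerprint_key`, `flag_shared_signatures`, and the `min_distinct_ips` threshold are all hypothetical.

```python
import hashlib
from collections import defaultdict


def fingerprint_key(attrs: dict) -> str:
    """Hash a session's fingerprint attributes into a stable signature."""
    # Sort keys so the same attributes always produce the same hash.
    canonical = "|".join(f"{key}={attrs[key]}" for key in sorted(attrs))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def flag_shared_signatures(sessions: list, min_distinct_ips: int = 3) -> set:
    """Return signatures seen from at least `min_distinct_ips` different IPs."""
    ips_by_signature = defaultdict(set)
    for session in sessions:
        signature = fingerprint_key(session["fingerprint"])
        ips_by_signature[signature].add(session["ip"])
    return {
        signature
        for signature, ips in ips_by_signature.items()
        if len(ips) >= min_distinct_ips
    }


fleet_fp = {"os": "Linux", "gpu": "SwiftShader", "fonts": "minimal"}
sessions = [
    {"ip": "203.0.113.1", "fingerprint": fleet_fp},
    {"ip": "203.0.113.2", "fingerprint": fleet_fp},
    {"ip": "203.0.113.3", "fingerprint": fleet_fp},
    {"ip": "198.51.100.7", "fingerprint": {"os": "Windows", "gpu": "NVIDIA", "fonts": "full"}},
]
flagged = flag_shared_signatures(sessions)
print(fingerprint_key(fleet_fp) in flagged)  # True
```

Once a signature is flagged, every session that carries it is exposed, including sessions from customers who did nothing wrong.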

At scale, you simply can't use Linux-only fingerprints.
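As a small illustration, here's one way to assign each session an OS profile in proportion to the desktop shares quoted above. This is a sketch of the idea, not our production logic. `OS_SHARES` and `assign_os_profiles` are names made up for this example, and the percentages are the approximate ones from this post.

```python
import random
from collections import Counter
from typing import Optional

# Approximate desktop OS shares as quoted in this post.
OS_SHARES = {"Windows": 0.60, "macOS": 0.35, "Linux": 0.05}


def assign_os_profiles(n_sessions: int, seed: Optional[int] = None) -> list:
    """Pick an OS profile for each session, weighted by real-world share."""
    rng = random.Random(seed)  # a seed makes the assignment reproducible
    return rng.choices(
        list(OS_SHARES),
        weights=list(OS_SHARES.values()),
        k=n_sessions,
    )


profiles = assign_os_profiles(10_000, seed=42)
print(Counter(profiles))
```

Picking the label is the easy part. The session then has to actually look like that OS across every signal, which is where the browser-level work comes in.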

In-house CAPTCHA solving

CAPTCHAs are one of the biggest blockers for agent automation.

We built our own CAPTCHA solving infrastructure with in-house models. No third-party APIs. No external dependencies. Everything runs on our stack.

Currently supported:

- Cloudflare Turnstile

- PerimeterX Click & Hold

- reCAPTCHA

More coming soon.

Because it's all in-house, we include automatic CAPTCHA solving for free for all Browser Use customers. No per-solve fees. No usage limits. When your agent hits a challenge, we handle it automatically.

Good fingerprinting means fewer CAPTCHAs in the first place. When your browser looks legitimate, websites don't challenge it as often. Our fingerprint consistency reduces CAPTCHA rates significantly before solving even becomes necessary.

My prediction? CAPTCHAs don't really make sense anymore. Modern solvers consistently outperform humans in both speed and accuracy. Meanwhile, CAPTCHAs harm conversion rates and user experience for legitimate visitors. They'll fade away as a detection method.

What's next

This is the first post in a series where we'll go deep on browser automation and anti-detection. Upcoming topics:

- How our in-house CAPTCHA solving works

- Competitor benchmarks

- Deep technical analysis of antibot systems

- How Browser Use skills work

- How our infrastructure is deployed at scale

If you're building with browser automation and running into stealth issues, or just want to chat about the space, reach out on X @reformedot or email me.

Aitor Mato

Author